If you’re here, then there’s a high chance you already know the importance of good backups and what they mean to a serious business.

If you’re here, then there’s a high chance you already know the importance of good backups and what they mean to a serious business.

Taking care of database backups is an important and very sensitive job.

This is why we tried to make a shortlist of some backup best practices that you should be aware of when you’re either doing backups or you’re trying to restore them, in order to be covered for any of the common and slightly uncommon situations involving backups.

Backup best practices, a shortlist

This shortlist will hopefully be a great step forward for you and for your backups. If you find anything in this list that you’re already doing, then good for you!

However, if you know any best practices that you think are missing from this list, please leave a comment and we will consider it and possibly add it to our list. This way we will contribute to the knowledge “database” about backups, which might someday help someone else in your position.

-

Backups should not be on the same physical storage device as your database files

So, as you might suspect, having the database backup on the same physical drive as the database itself is not a particularly good idea, because if something bad happens to the drive then you lose both your database and your backup.

This is why one of the first tips is to put your backups on different storage, as far as possible from the database it backs up.

It might look like a simple and obvious thing to do, but in case you’re not doing it, you should, because it could save you a lot of work.

-

Set up schedules to automate your backups

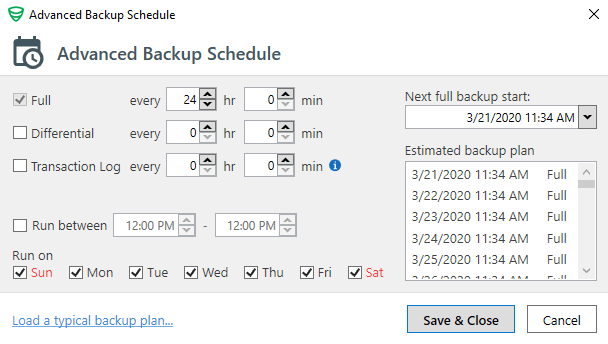

The next step in prevention and backup safety and reliability is to set up (automate) the backup process through the use of schedules.

Creating such schedules is important because, with the passing of time, your backups get older and out of date. Also, with time, there is a higher risk of data loss.

Keep yourself protected and be sure that you always have at hand a way to reestablish your data up until the point where the database failed.

These schedules will provide you an exact history of your data with the frequency you specify, depending on the business needs of your application or company, the importance of your data or other particular factors.

-

Use a test server to verify the restore procedure of your backups

You might think your data is safe if the backup process has finished successfully, but there is still another step that has to be done before you can safely say you have backed up your data — the restore process.

Without going through the entire procedure of recovering your data, through the restoring process, you cannot be sure that you will still be able to access it. Other factors, such as media corruption, may have occurred, meaning that you cannot retrieve your data after backup.

Another item in the list of backup best practices is to, on a test server, frequently do a restoring test with all of the options you are going to use in a real-life scenario. This will minimize the chances that you are caught off guard in the event of an unfortunate situation.

-

Test your recovery strategies

The next bullet point in the backup best practices list is in regard to recovery strategies.

The success of a company is determined by its ability to quickly respond to changes. Although backup strategies are created to cover a full array of events, with time, they might become out of date.

This is why, periodically, you should take the time and go over all of the scenarios and see if there are any new elements that should be introduced in your specific strategy.

These scenarios should take into consideration both the impact on your individual database restores and also on the backups of your system databases.

-

Use all available verification options during the backup process

One more tip we can give about backup best practices is that you should be using all of the available verification options when you are doing your backups. This will help you be sure that all of the backups that you create will be created correctly and will be consistent from a transactional point of view.

Now, depending on how you prefer to do your backups, whether it’s with T-SQL, through a wizard or another application, there are multiple options.

For example, if you are using T-SQL:

- Make sure that when you write your BACKUP command you also include the CHECKSUM condition in the WITH clause. This will force the operation to verify that each page of data written to the backup is passed through a checksum operation to ensure consistency on the backup media. A sample query would be like this:

BACKUP DATABASE MainDB TO DISK = 'X:\Backups\MainDB.bak' WITH CHECKSUM;

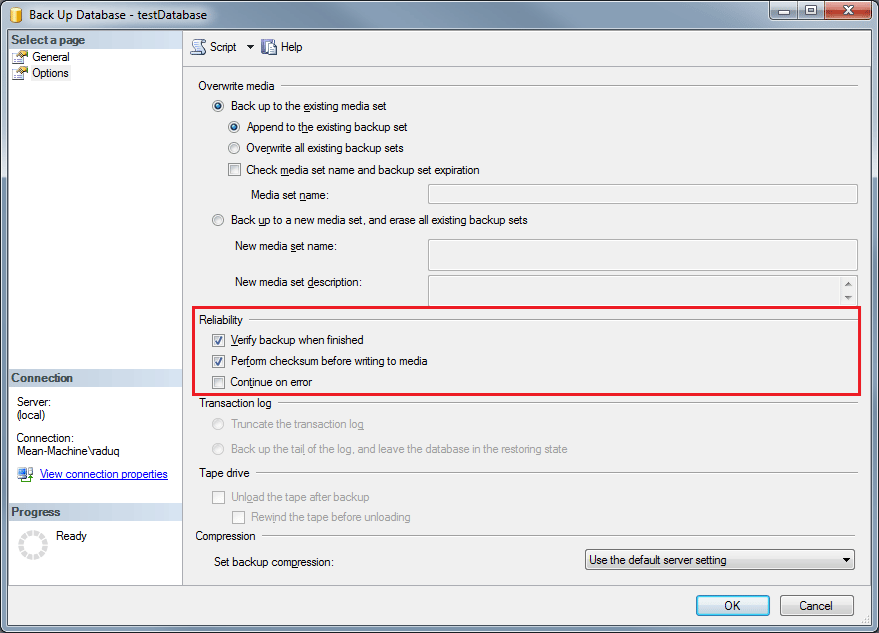

- In the case you’re using a visual interface, for example, SSMS (SQL Server Management Studio), make sure to have ticked the first two options in the Reliability section:The options, verify backup when finished, and perform checksum before writing to media, are going to act as an additional step of verification in the backup workflow and will guarantee that your backup is consistent, along with the data in it.

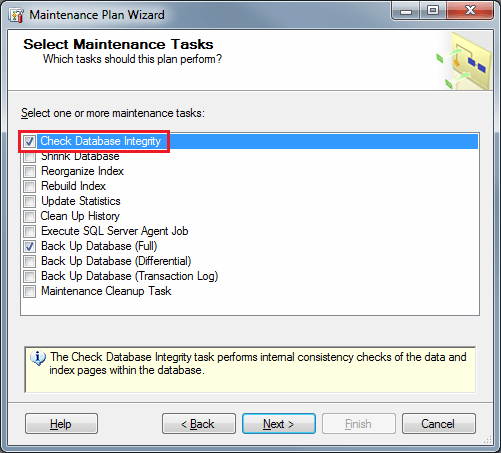

A different approach of making backups would be through SQL Server Maintenance Plans. Here you will have to tick a single checkbox, the option for Database Integrity.

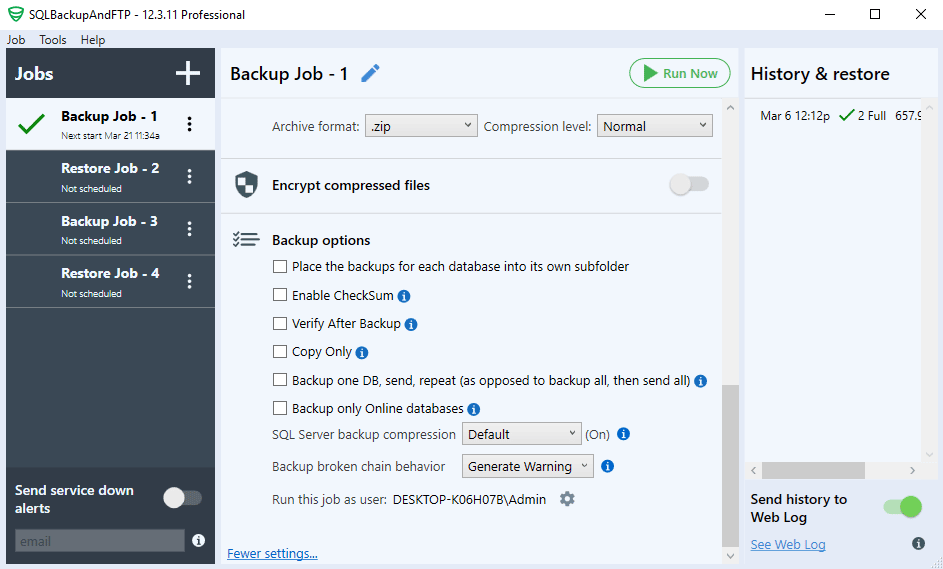

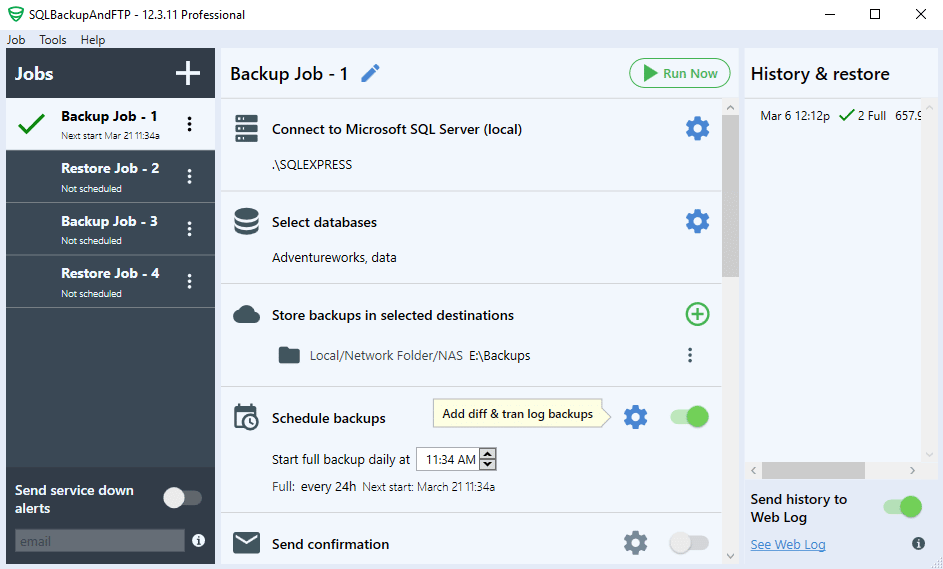

If all of the above options seem to be for any reason out of hand for you, then we recommend using another piece of software, SQLBackupAndFTP. It is a very very simple tool to use and it has all of the most important options.

Now, we’re just over halfway through our list, and if you have started to incorporate any of these practices in your backup process, then you will surely have skipped yourself a few headaches in the future.

Now, continuing with our list, the next items in our backup best practices list are:

- Make sure that when you write your BACKUP command you also include the CHECKSUM condition in the WITH clause. This will force the operation to verify that each page of data written to the backup is passed through a checksum operation to ensure consistency on the backup media. A sample query would be like this:

-

Doing a full backup daily

This might sound like a bit too resource-intensive operation, but it is the best way to prevent any data loss.

Now, depending on the business needs of your organization you should pick a recovery model (more details here) that will be your organization’s first line of protection against data loss. If your organization can accept to lose 15, 30 or 60 minutes worth of data, then you should go for a simple recovery model.

Most cases would require you to do this full backup on a daily basis, but despite the overhead added by the time and occupied resources for such a process, the guarantee that your data is safe is, in our opinion, worth it.

If the organization you’re doing backups for has small databases with data that is easily recoverable, then a full backup should definitely be the correct way to go.

As we have already mentioned SQLBackupAndFTP earlier, if you have been curious and downloaded it, you will be surprised to see that it has, by default, set its backup schedule to do a full backup every 24 hours.

This is very helpful, as this way there is no more risk that a detail that is most important will be overlooked.

This is very helpful, as this way there is no more risk that a detail that is most important will be overlooked.If you are to incorporate just this item from our list of backup best practices, then you have a great advantage as the recovery is easier if the backup is just one file and no database logs are needed.

However there is a downside to this as data can only be recovered up until the date when the backup was created and, depending on your database size, the backup can occupy a lot of disk space.

-

Doing differential backups more frequently

We just said that you should consider doing full backups on a daily basis, but even if you do not do it every day, differential backups should be done even more frequently than full backups.

Differential backups are not so resource hungry and data loss is reduced even more if they are done on a frequent basis, after having done a full backup.

In terms of advantages, differential backups will take less time to complete and also less disk space as they will contain only the data that has been created since the last full backup. No database logs are needed in this case either and like we’ve said before, data will be more up to date.

The disadvantage of this backup type is also the fact that data can be recovered only up until the date when the last differential backup was created and the restore process of the data will be slightly more complicated as now there are two files that have to be incorporated into the restore process.

-

Do transaction log backups even more frequently

The following item in our list of backup best practices is one that leads the full and differential backups in terms of the frequency it should be made.

Because transaction logs actually contain all the recent activity that has occurred in the database, they can be used to make a restore of the database to a specific point in time, and this is their biggest advantage!

By doing a transaction log backup you also truncate the log and this way you keep it from becoming full. Also, transaction log backups can be performed while the system is working.

If the frequency of new data that is created in your database is very high, then we recommend doing a transaction log backup every 10 minutes, while for other databases that are less active doing such a backup every 30 or 60 minutes should be sufficient.

The downside of this backup type, however, is that the database has to have transaction logging activated which will increase the size of the database and will also increase the effort when doing a restore of a transaction log.

-

Back up system databases as well

You might think that having a backup strategy with all of the points mentioned above is more than enough to protect your organization from data loss, but it is not.

A backup strategy is incomplete if you do not have a backup plan for your SQL Server system databases, master, model and msdb.

These databases are absolutely essential because they contain system configuration and also the SQL Server job information which will need to be restored in case of a total system restore.

You should make a strict plan of backing up your system databases, at least as frequently as your full backups, and at least daily if you’re frequently changing instances. But in the case where your installation is more stable, you should do this less frequently.

If up until this point you still haven’t considered taking a look at SQLBackupAndFTP for your backup process, there is still some good news that might make you change your mind.

As you can see in the screenshot above, you have the possibility of also backing up your system databases, along with your user databases, with just a few clicks. You can still back up these tables within a schedule and add the backups to your specified location either on the same disk or on a different machine. -

Backup your most sensitive data to tape

This might not be a very common situation, but if your organization wants to be fully protected against data loss, then be sure to back up the data that it cannot afford to lose to tape.

Tape is much more resistant to physical shock than disk drives and due to this, it has a major advantage. Just imagine a situation where you drop a box full of disk drives containing the last three months worth of data down the stairs. At that very second, you lose three months worth of data, but with tape drives, you can pick the tapes up, and continue on.

Because there are no magnetic and nearly no electrical components in the drive, it uses less power than a conventional disk drive.

They are less sensitive to wear, as general media life for tapes are around 20 to 30 years and the lifespan of the drive is around 10 years.

So in conclusion, with tape you get:

- Very long media lifetime (20–30 years)

- Very easy to transport

- Lower power consumption

- Higher reliability

- A long lifetime for the drive (around 10 years)

Today’s tapes, which are the 5th generation, LTO-5 have a high speed at around 140 MB/sec and can store around 1.5 TB of uncompressed data, which is still pretty much sufficient for today’s needs.

In conclusion, if you start incorporating at least a few of these best practices in your backup process, you will eventually find the best combination of tools and tricks that will make your process more efficient and your data will be safer.

We recommend using SQLBackupAndFTP as it helps you cover most of the important steps above very fast so you can be sure you will not have problems with your backups in the future.

In any way that you choose to implement these tips, please share the knowledge. Share this post in order to help others that might not be aware of what they could do to improve their backup process and get rid of future headaches.

Thanks for sharing this wonderful article. I use Drop Box and cloud drive for backups. Do you recommend something in particular and better than them? Would love to read an article on such suggestions 🙂

Hi! This is a good suggestion and I have added it to my list. Please return in a couple of days when the article will be posted.

Thanks for sharing this wonderful article. I use Drop Box and cloud drive for backups

I don’t fully understand the SqlBak scheduler – how it should be set up to make full backup each weekend, differential backup each day of the week and transactional log backup each hour?

thanks for sharing i recently had issue that the sql server agent stopped running after a server restart so we did not have the backup when needed so it would be a good practice to add a verification system to check if the backups are being done

Thanks for a good and informative article!

I agree SQLBackupAnFTP is a great tool for quickly setting up backups!

Actually I have an issue right now where my Diff-backups are failing, but Full and Log backup are working fine.

ERROR: Cannot perform a differential backup for database “”, because a current database backup does not exist. Perform a full database backup by reissuing BACKUP DATABASE, omitting the WITH DIFFERENTIAL option.

BACKUP DATABASE is terminating abnormally.

This is a bit strange, since the FULL backup is working fine, and this error on DIFF backups has recently started without no obvious reason.

I use a standard backup strategy from SQLBackupAndFTP with Full-24hr/Diff-4hr/Log1-1hr

Any ideas to share?

I will try to issue a full backup manually, and see if the problem disappears.

Nice text, thanks!

It’s an amazing post recommending of SQL best practices. Also, the explanation is detailed and easy to grasp. Thanks.

Under transaction log backup you state “The downside of this backup type, though, is that the database has to have transaction logging activated” Since all SQL DB have a transaction log, do you mean that the DB Recovery model must be set to Full or Bulk-logged rather than simple? The size of your transaction log is dependant on how much data is changed/added and how frequently it is backed up so implementing transaction log backups should not increase the size of the database.

Thanks for a good and informative article!

I agree SQLBackupAnFTP is a great tool for quickly setting up backups